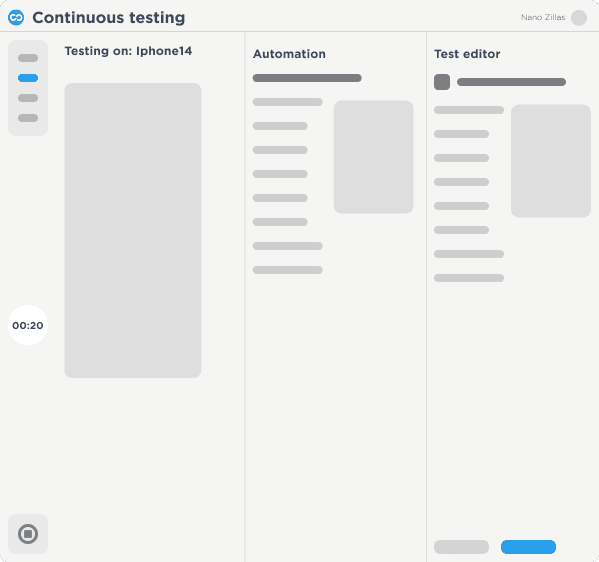

Continuous Testing

Continuously deliver flawless customer experiences at speed with scalable web and mobile testing

Scalable Web and Mobile Testing

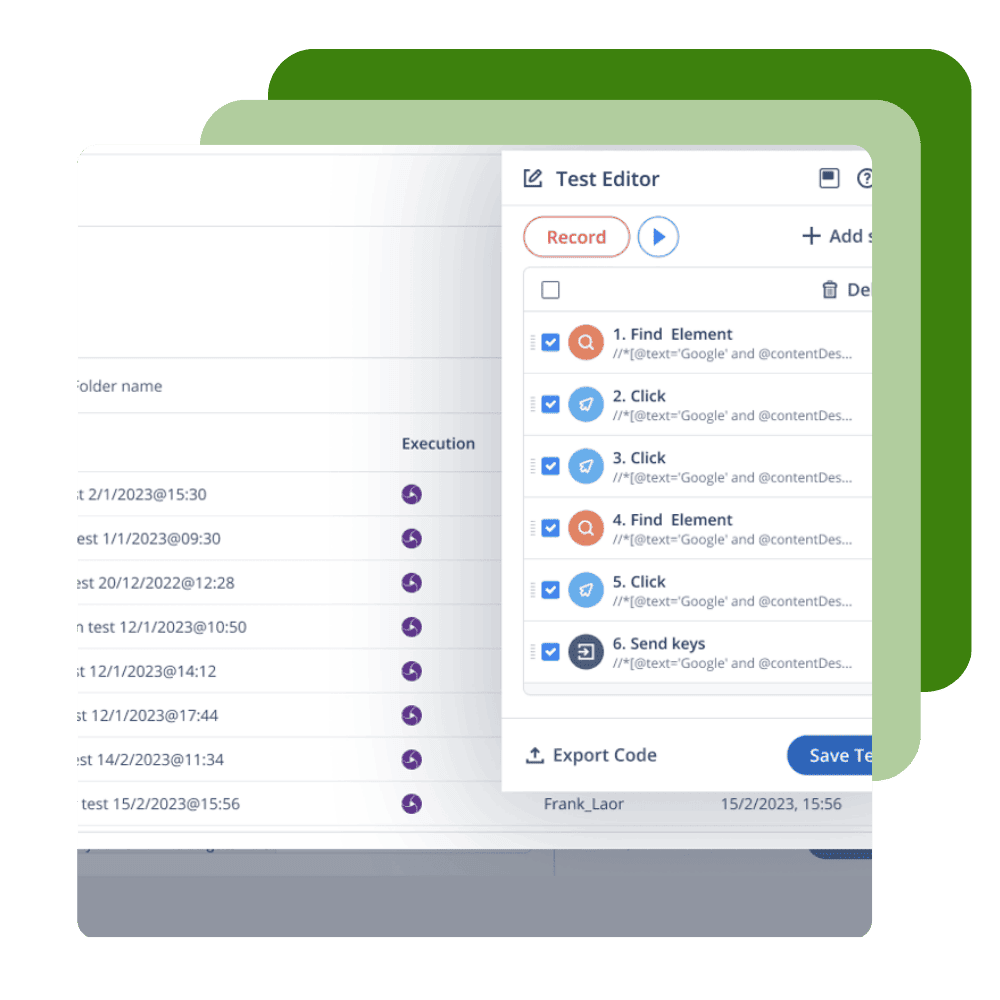

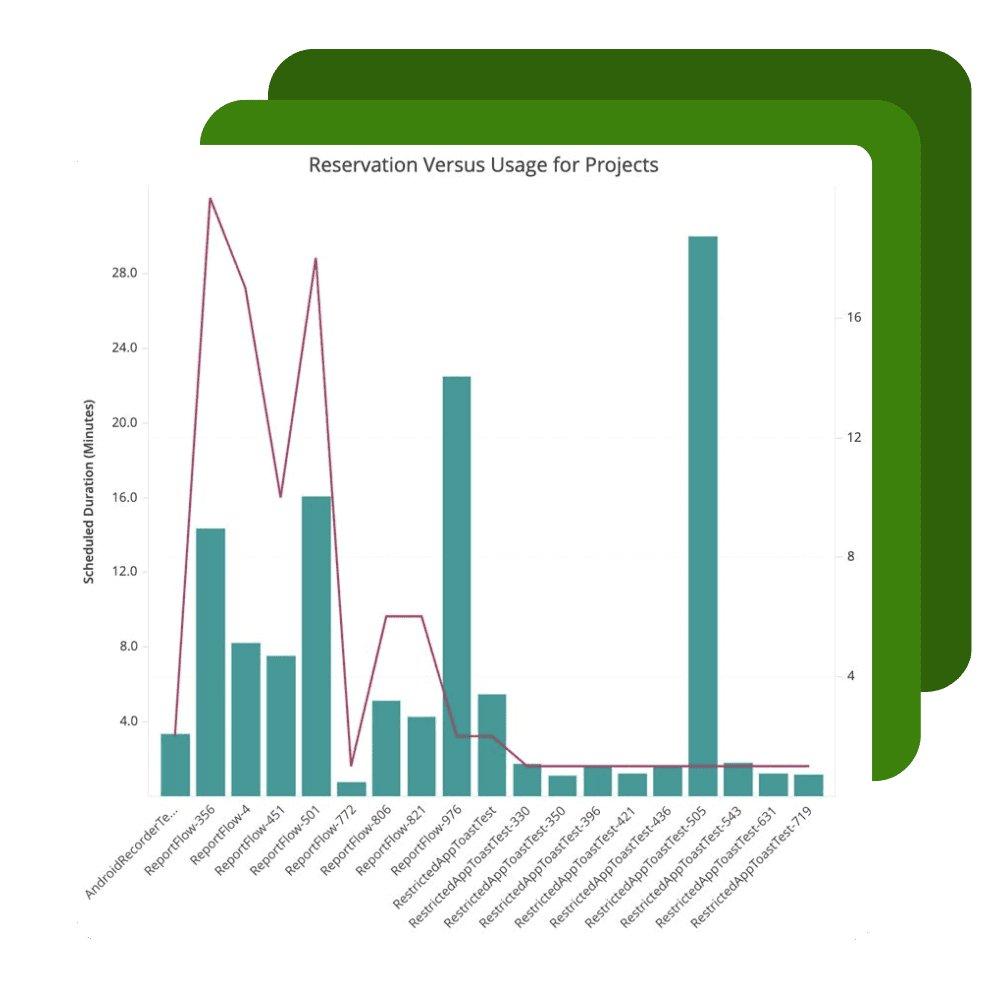

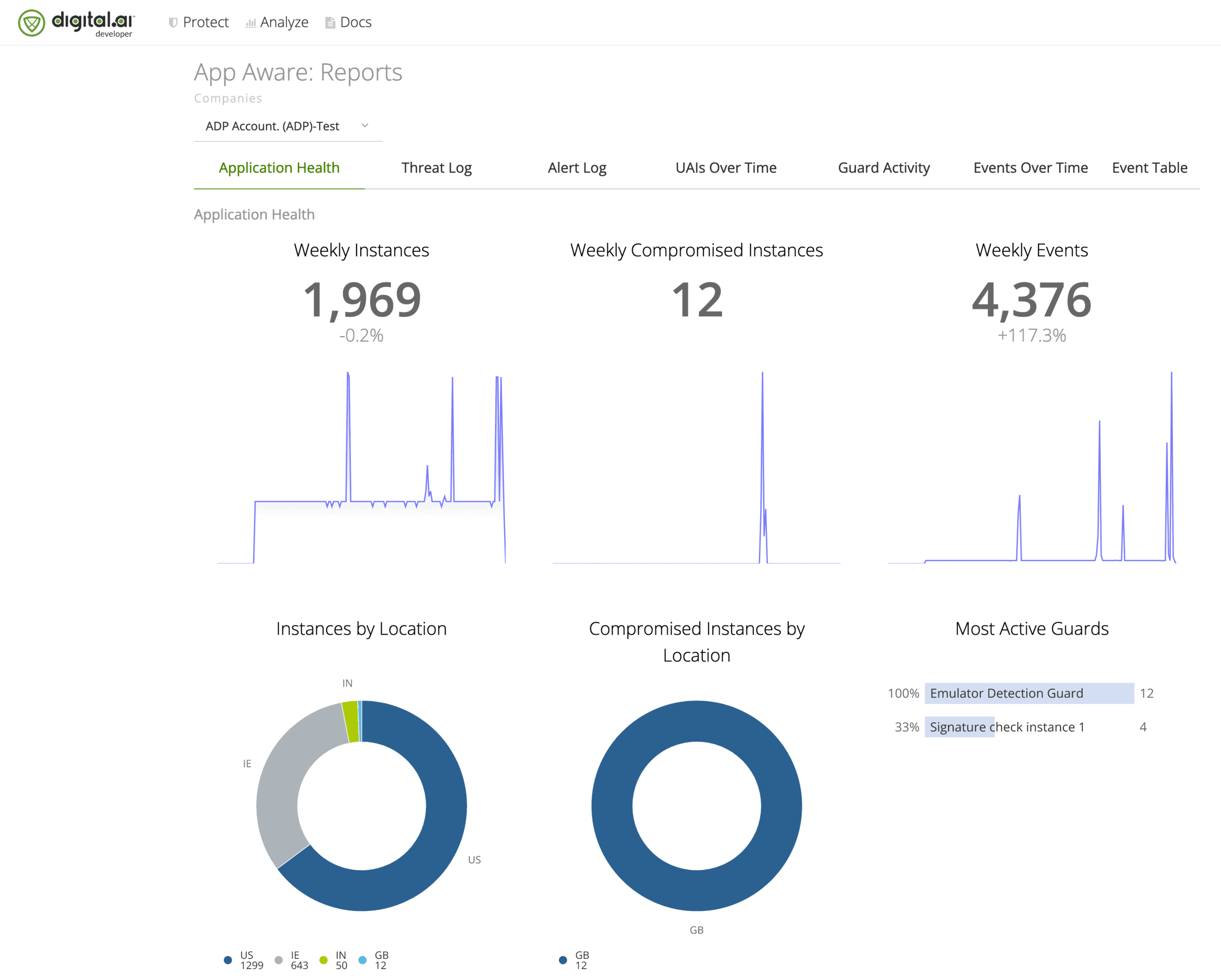

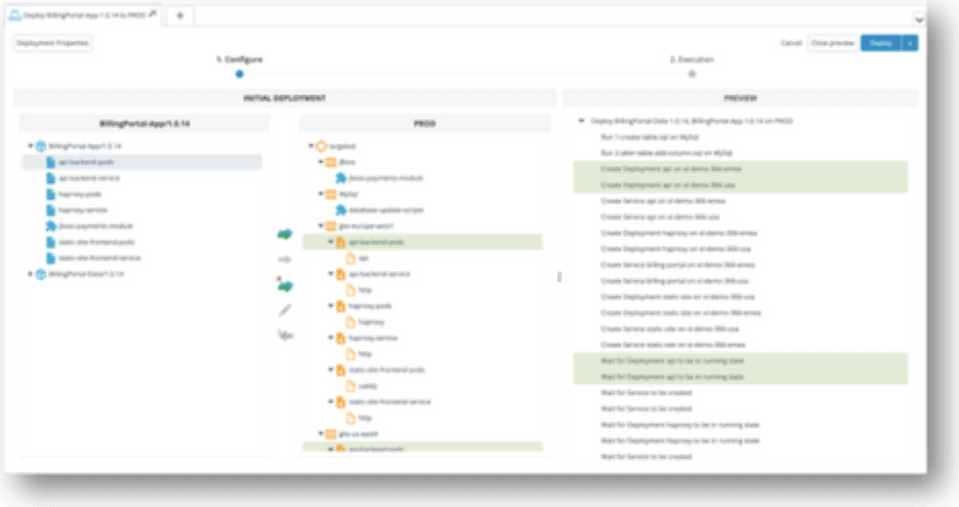

Digital.ai Continuous Testing is a scalable web and mobile application testing solution that increases test coverage and helps organizations make data-driven decisions. It helps testing teams execute functional, performance, and accessibility scenarios at scale, and it includes simplified AI-powered test creation that allows any team member to generate automated scripts.

Trusted By Enterprise Customers

Continuously test your web and mobile apps with enterprise-grade security, scalability, and visibility

Capabilities

Give your DevOps teams easy access to thousands of real devices, virtual devices, and browsers, wherever the teams are located.

Related Resources

BLOGS

How AI and ML are Revolutionizing Web and Mobile Automated Testing

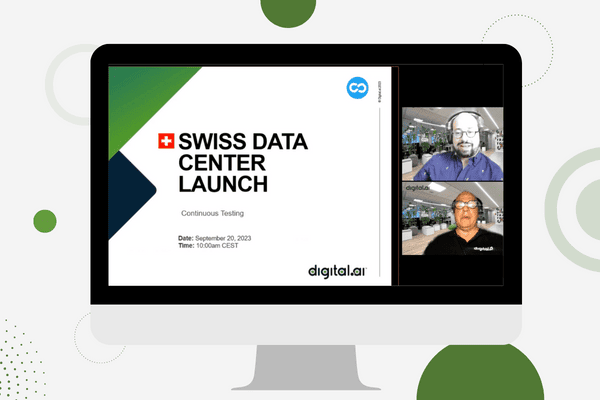

WEBINARS

Continuous Testing – Swiss Data Center

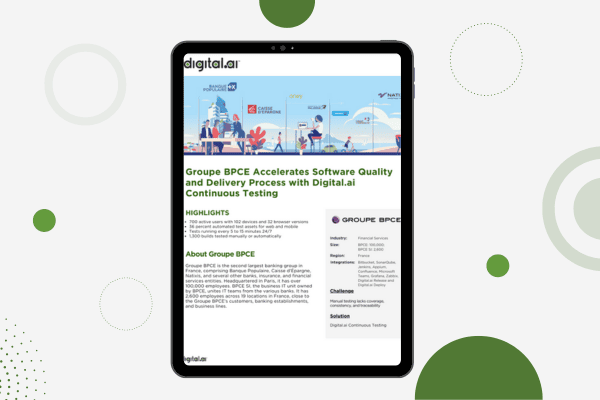

CASE STUDIES

Groupe BPCE Accelerates Software Quality and Delivery Process with Digital.ai Continuous Testing

WEBINARS

How Test Management Ensures Agile Requirements Meet Testing Results

See Digital.ai Continuous Testing in Action

We have helped thousands of teams across industries configure, implement, and optimize Digital.ai Continuous Testing for error-free apps at scale. Contact us to learn more.